AI image generators are among the most powerful creative tools ever released to the public. With a simple text prompt, systems like DALL-E, Midjourney, Stable Diffusion, and Flux can generate images that rival professional photography and illustration.

Yet almost all mainstream AI image generators are heavily censored. Prompts are blocked, outputs are filtered, and entire categories of imagery are restricted. To many users, this feels arbitrary or overly paternalistic—but the reasons are more complex than they first appear.

The Short Answer: Risk, Liability, and Scale

AI image generators are censored because they scale risk faster than any previous creative tool. A single user can generate thousands of realistic images per hour, and those images can be misused in ways that are difficult—or impossible—to undo.

Censorship is not primarily about creativity. It is about preventing harm at industrial scale.

The Core Reasons AI Image Generators Are Censored

1. Explicit and NSFW Content

One of the earliest and most obvious uses of image generators was the creation of pornographic or sexually explicit images. Unlike traditional art tools, AI can:

- Instantly generate explicit imagery of realistic humans

- Mimic specific body types, ages, or appearances

- Be used anonymously and at massive volume

For companies operating public platforms, this creates legal and ethical exposure—especially around non-consensual imagery and age-related content. As a result, most hosted AI tools implement aggressive content filters that block nudity, sexual acts, and erotic prompts outright.
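
To make the idea of a content filter concrete, here is a minimal sketch of the two checkpoints a hosted service might use: one on the prompt before generation and one on the output afterwards. Everything in it is an assumption for illustration: the `BLOCKED_TERMS` list, the `Decision` type, the function names, and the 0.5 threshold are hypothetical, and real platforms typically rely on trained classifiers rather than word lists.

```python
from dataclasses import dataclass

# Hypothetical blocklist for illustration only; production systems
# generally use trained classifiers, not fixed word lists.
BLOCKED_TERMS = {"nude", "explicit"}


@dataclass
class Decision:
    allowed: bool
    reason: str


def check_prompt(prompt: str) -> Decision:
    """Reject a prompt if any blocked term appears as a whole word."""
    words = {w.strip(".,!?;:").lower() for w in prompt.split()}
    hits = sorted(words & BLOCKED_TERMS)
    if hits:
        return Decision(False, "blocked terms: " + ", ".join(hits))
    return Decision(True, "ok")


def check_output(nsfw_score: float, threshold: float = 0.5) -> Decision:
    """Reject an image whose (assumed) classifier score meets the threshold."""
    if nsfw_score >= threshold:
        return Decision(False, f"score {nsfw_score:.2f} >= {threshold:.2f}")
    return Decision(True, "ok")
```

A word list like this blocks innocent prompts and misses rephrased ones, which is one reason filters on hosted tools can feel arbitrary to users.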

2. Deepfakes and Identity Abuse

Modern image models can generate people who look real, or worse—people who look like specific real individuals.

This raises serious concerns:

- Fake images of public figures can be used for defamation

- Realistic portraits can be weaponized for harassment or blackmail

- Non-consensual deepfake imagery can cause permanent reputational harm

Because AI images are increasingly indistinguishable from real photographs, platforms are under pressure to prevent their tools from becoming engines for mass impersonation.

3. Misinformation and Trust Erosion

AI image generators can fabricate events that never happened:

- Fake protests

- Fake crimes

- Fake news photos

- Fake evidence

When such images circulate online, they erode public trust—not just in media, but in photography itself. This is why many generators restrict prompts involving real-world events, politics, or identifiable individuals.

4. Platform Liability and Brand Risk

Companies like OpenAI and Midjourney are not just building tools; they are running services. That exposes them to legal and financial consequences for what their users create on their infrastructure.

From a business standpoint:

- One viral misuse incident can trigger regulation

- App stores can delist platforms that allow NSFW content

- Payment processors can refuse service

Censorship is often less about ethics and more about survival.

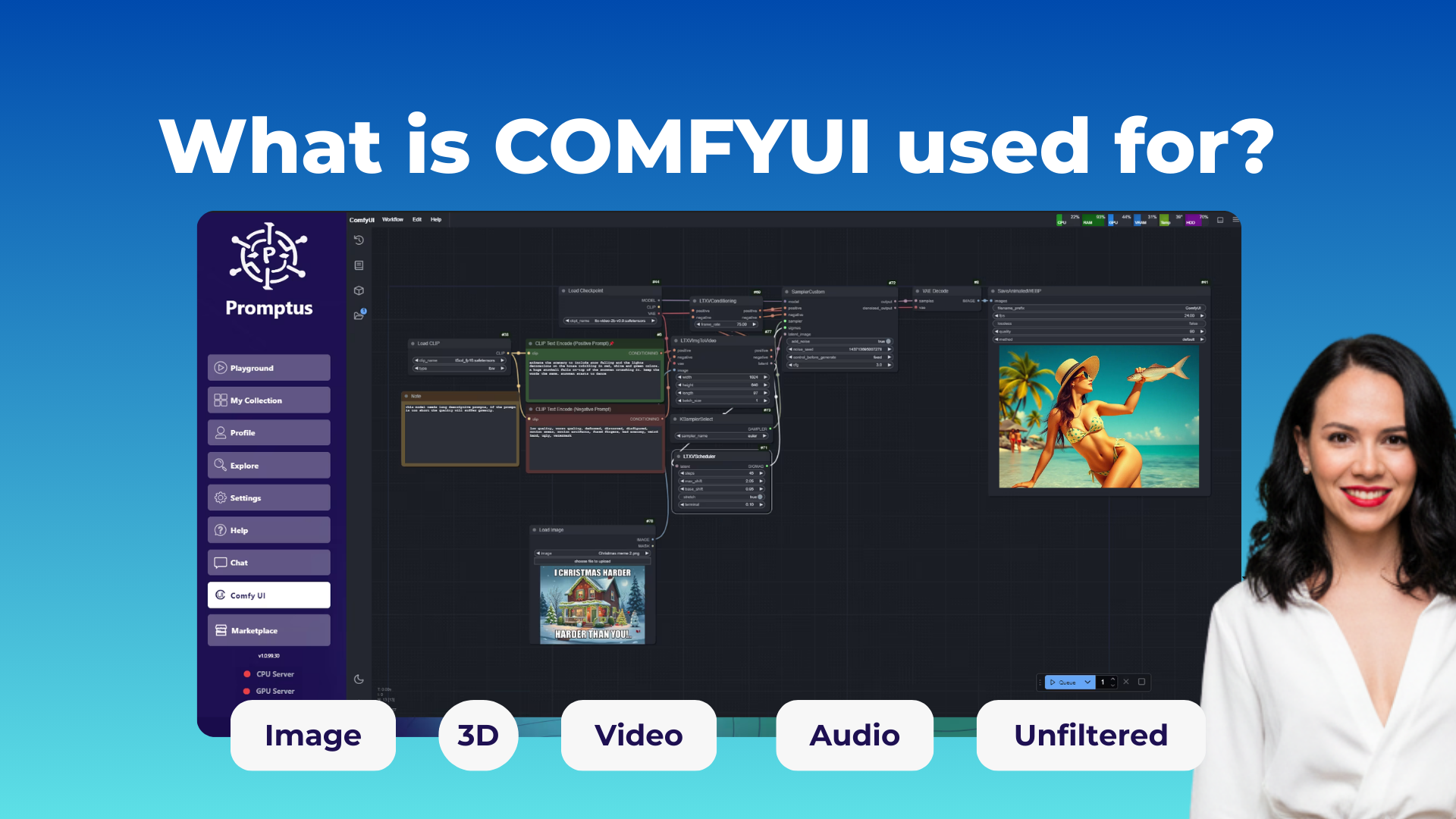

Why Local Tools Like Promptus Are Different

This is where tools like the Promptus Desktop App enter the conversation.

Promptus does not host your images or prompts on a centralized platform. Instead, it acts as a local interface for running models directly on your machine. This fundamentally changes the censorship equation.

- There is no public feed to moderate

- No shared platform reputation to protect

- No centralized content liability in the same way

As a result, censorship is determined mostly by the model you choose, not by Promptus itself.

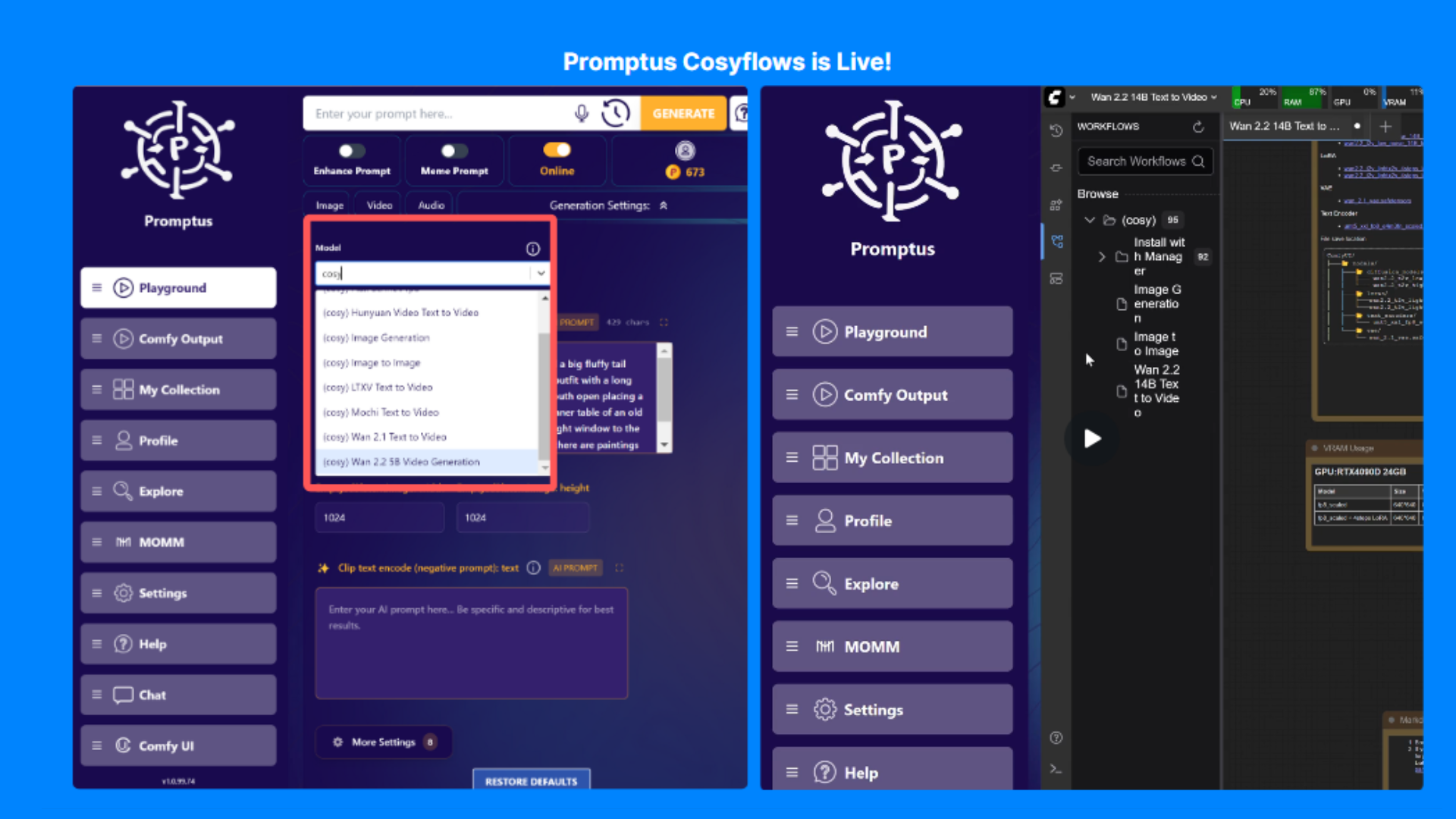

Models vs Templates: Clearing Up a Common Confusion

Many users assume censorship is built into the app. In reality, there are two separate layers:

1. The Template (CosyFlow)

In Promptus, templates like CosyFlows define how images are generated—the workflow, settings, and logic.

If you are using the Promptus Desktop App, the best current CosyFlow for photorealism is one built on Flux.

Flux is not just an upgrade over older Stable Diffusion workflows—it is a generational leap. It handles:

- Human anatomy more accurately

- Natural lighting and exposure

- Fewer artifacts like extra fingers or warped faces

Older “Stable Diffusion” templates still work, but they are increasingly outdated for high-end realism.

2. The Model (The Actual Brain)

The template is the engine—the model is the fuel.

The official Flux.1 [dev] model from Black Forest Labs is one of the most capable open-weight image models available today. It follows prompts closely and rivals or surpasses proprietary systems in realism.

However, official models tend to be more conservative in what they allow.

Community fine-tuned models (often hosted on sites like Civitai) remove many of those guardrails. These models are not “more powerful”; they are simply less restricted.

This distinction is crucial:

- Promptus itself is not “uncensored”

- The censorship level depends almost entirely on the model you load
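
The separation between the two layers can be sketched in a few lines. This is an illustrative model only, not how Promptus is implemented: the `Template` and `Model` classes, their fields, and the example names and settings are all hypothetical, and a single `has_guardrails` flag is a deliberate simplification of what is really a spectrum.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Template:
    """Workflow layer: how an image is generated (hypothetical fields)."""
    name: str
    steps: int
    guidance: float


@dataclass(frozen=True)
class Model:
    """Weights layer: what the generator will produce (hypothetical fields)."""
    name: str
    has_guardrails: bool  # simplification: real restrictions are not binary


def describe_run(template: Template, model: Model) -> str:
    """Summarize a run; only the model affects the restriction level."""
    restriction = "restricted" if model.has_guardrails else "less restricted"
    return f"{template.name} ({template.steps} steps) + {model.name}: {restriction}"


# Same template, two different models (all names are placeholders).
flux_template = Template(name="flux-photoreal", steps=28, guidance=3.5)
official = Model(name="official-model", has_guardrails=True)
community = Model(name="community-finetune", has_guardrails=False)

print(describe_run(flux_template, official))
print(describe_run(flux_template, community))
```

Swapping the model changes the restriction level while the template stays the same, which is the point of treating them as separate layers.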

Why Flux Became the New Standard

Flux displaced older Stable Diffusion pipelines in many workflows because it addressed several of the technical weaknesses that had pushed platforms toward blunt, catch-all restrictions in the first place.

Poor anatomy, visual noise, and unpredictability made earlier models harder to control. Flux’s improved understanding of structure and lighting reduces accidental outputs—and ironically, makes it easier to enforce intentional restrictions where desired.

That’s why many consider Flux the new baseline rather than an edge case.

The Bigger Picture

AI image generators are censored not because they are dangerous by default—but because they are too effective.

When a tool can:

- Instantly create believable reality

- Operate anonymously

- Scale infinitely

…society reacts with constraints.

Local tools like Promptus represent a middle ground: giving creators control without central moderation, while placing responsibility back on the user rather than the platform.

As models improve and norms evolve, censorship will likely shift—but it is unlikely to disappear entirely.
